Background

Surgical risk stratification tools have existed since the creation of the Cardiac Risk Index in 1977.1 Since then, risk calculators have proliferated, the three most notable being the Revised Cardiac Risk Index (RCRI), the American College of Surgeons-Surgical Risk Calculator (ACS-SRC), and the Gupta Myocardial Infarction and Cardiac Arrest calculator (MICA).2–4 The American College of Cardiology/American Heart Association (ACC/AHA) recommends a step-wise preoperative risk stratification process that classifies patients as either low risk (<1% risk of major adverse cardiac events [MACE]) or elevated risk (≥1% risk of MACE) using the RCRI, ACS-SRC, or MICA, without privileging any one tool above the others.5

The RCRI, the oldest of the current scoring systems, was published in 1999 as an update to the Goldman Risk Index.2 It identifies 6 independent variables that predict 30-day major cardiac complications, including myocardial infarction, complete heart block, pulmonary edema, ventricular fibrillation, and cardiac arrest. Its strengths include simplicity, provider familiarity, and external validation in subsequent studies.6–11 Its weaknesses include small derivation (n = 2,893) and validation (n = 1,422) cohorts and its age; notably, the RCRI was developed before cardiac troponin assays existed. In 2017, a meta-analysis updated the RCRI risk estimates, suggesting that the original RCRI broadly underestimated surgical risk.7 Because the current ACC/AHA guidelines were published in 2014, they do not incorporate the findings of this subsequent meta-analysis.
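The RCRI's simplicity is easiest to see as arithmetic: one point per predictor present, with the point total mapped to a risk stratum. The sketch below is a minimal illustration, not the authors' dot phrase or any published calculator. The field names are hypothetical, the six predictors follow the commonly cited RCRI criteria (they are not listed in this paper), and the event rates are assumed values from the original 1999 validation cohort that should be checked against the primary source.

```python
from dataclasses import dataclass

# ASSUMED event rates for major cardiac complications by RCRI point total,
# as we understand them from the 1999 validation cohort; verify before use.
ORIGINAL_RCRI_RISK = {0: 0.004, 1: 0.009, 2: 0.066, 3: 0.11}


@dataclass
class RcriInputs:
    """The six RCRI predictors (field names are hypothetical)."""
    high_risk_surgery: bool         # intraperitoneal, intrathoracic, or suprainguinal vascular
    ischemic_heart_disease: bool
    congestive_heart_failure: bool
    cerebrovascular_disease: bool
    insulin_treated_diabetes: bool
    creatinine_mg_dl: float         # preoperative serum creatinine


def rcri_points(p: RcriInputs) -> int:
    """Count one point per predictor present (range 0 to 6)."""
    return sum([
        p.high_risk_surgery,
        p.ischemic_heart_disease,
        p.congestive_heart_failure,
        p.cerebrovascular_disease,
        p.insulin_treated_diabetes,
        p.creatinine_mg_dl > 2.0,   # creatinine counts only if strictly above 2.0 mg/dL
    ])


def rcri_risk(points: int) -> float:
    """Look up the assumed event rate; 3 or more points share one stratum."""
    return ORIGINAL_RCRI_RISK[min(points, 3)]


patient = RcriInputs(True, True, False, False, False, creatinine_mg_dl=1.1)
points = rcri_points(patient)
print(points, rcri_risk(points))  # prints: 2 0.066
```

With these assumed rates, 0 or 1 points fall below the ACC/AHA 1% threshold and 2 or more points fall at or above it.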

The MICA score, released in 2011, was developed using data from the National Surgical Quality Improvement Program (NSQIP).3 Its authors used multivariable logistic regression to identify 5 independent variables that predict the risk of 30-day myocardial infarction or cardiac arrest. Its strengths include large derivation (n = 211,410) and validation (n = 257,385) cohorts and simple inputs. Its weaknesses include the requirement that providers know a patient’s American Society of Anesthesiologists (ASA) physical status classification (not always clear for hospitalized patients requiring urgent surgery), less external validation, and limited provider familiarity.12

Finally, the ACS-SRC was first published as a web-based tool in 2013, also using data from the NSQIP database.4 Unlike the other two scoring systems, it is updated intermittently as new surgical data are received; its most recent update included surgical outcomes from 2015 to 2020. The ACS-SRC uses 21 input variables, requires Current Procedural Terminology (CPT) surgical codes, and calculates 14 distinct outcomes, including risk of death and length of stay. It predicts outcomes for over 117,000 specific surgeries using data from more than 800 participating hospitals.

Patients determined by one of these tools to be at elevated risk (≥1% risk of MACE) should be further stratified according to their functional capacity, most commonly expressed in metabolic equivalents (METS).13 If patients have adequate functional capacity (generally the ability to achieve 4 or more METS), the ACC/AHA recommends no further risk stratification. Although these tools cannot be compared directly because they use disparate end points and outcomes, retrospective studies have shown that they stratify risk differently.14–16 The RCRI, for example, has been shown to underestimate risk for vascular surgery and is considered a suboptimal tool for evaluating these procedures.11,17 Given this uncertainty about the optimal risk stratification tool, significant heterogeneity likely exists in preoperative risk assessment practices.
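The step-wise process described above reduces to two thresholds: a 1% MACE risk cut-off, then a 4-METS functional capacity cut-off for elevated-risk patients. The sketch below is a minimal illustration that takes a risk estimate from any of the three tools as input. It covers only the two steps described in this section, not the full ACC/AHA algorithm; the enum names and the wording of the final "further evaluation" branch are hypothetical, since the text does not detail that branch.

```python
from enum import Enum
from typing import Optional


class Recommendation(Enum):
    # Member names and messages are hypothetical labels for the branches.
    LOW_RISK_PROCEED = "low risk (<1% MACE): no further stratification"
    ADEQUATE_CAPACITY_PROCEED = "elevated risk, >= 4 METS: no further stratification"
    FURTHER_EVALUATION = "elevated risk, < 4 METS or unknown: consider further evaluation"


def stepwise_assessment(mace_risk: float, mets: Optional[float]) -> Recommendation:
    """Apply the two steps described in the text.

    mace_risk: estimated MACE probability (0 to 1) from RCRI, MICA, or ACS-SRC.
    mets: estimated functional capacity in METS, or None if it cannot be assessed.
    """
    # Step 1: the 1% MACE threshold separates low from elevated risk.
    if mace_risk < 0.01:
        return Recommendation.LOW_RISK_PROCEED
    # Step 2: elevated-risk patients with adequate functional capacity proceed.
    if mets is not None and mets >= 4:
        return Recommendation.ADEQUATE_CAPACITY_PROCEED
    # Remaining branch (poor or unknown capacity), not detailed in the text.
    return Recommendation.FURTHER_EVALUATION


print(stepwise_assessment(0.004, None).name)  # prints: LOW_RISK_PROCEED
print(stepwise_assessment(0.066, 5).name)     # prints: ADEQUATE_CAPACITY_PROCEED
```

Because functional capacity is consulted only after the risk threshold is crossed, METS alone cannot complete this process, which is why a METS-only note does not satisfy the guideline.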

Methods

To better understand the uncertainty surrounding the choice of surgical risk stratification tool, investigators conducted a retrospective chart review of 200 unique encounters performed by the hospital medicine consult service at a tertiary academic medical center from June 2019 to July 2020, representing approximately 40% of all hospital medicine consults performed during that period. The goal was to characterize patterns of risk stratification, identify strengths and weaknesses within the hospital medicine consult service, and make recommendations about how perioperative consults might be standardized to improve communication and patient outcomes. Standardization and simplicity in consult notes have been shown to increase compliance with recommendations.18,19

Researchers obtained a list of all hospital medicine consult notes from a 12-month period. Because this was a retrospective chart review and not human subjects research, IRB approval was not required; however, the initial data pull received a “Determination of Quality Improvement Status” from the hospital’s Institutional Review Board.

Researchers then manually reviewed 200 randomly selected charts, representing approximately 40% of all encounters, to determine whether a preoperative risk stratification was completed and, if so, which tool was documented. Multiple researchers performed the review, and a lead researcher reviewed all charts before completion to ensure accuracy. There were no disagreements to resolve.

Results

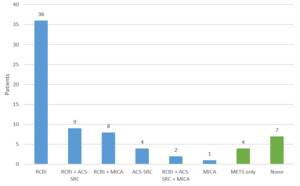

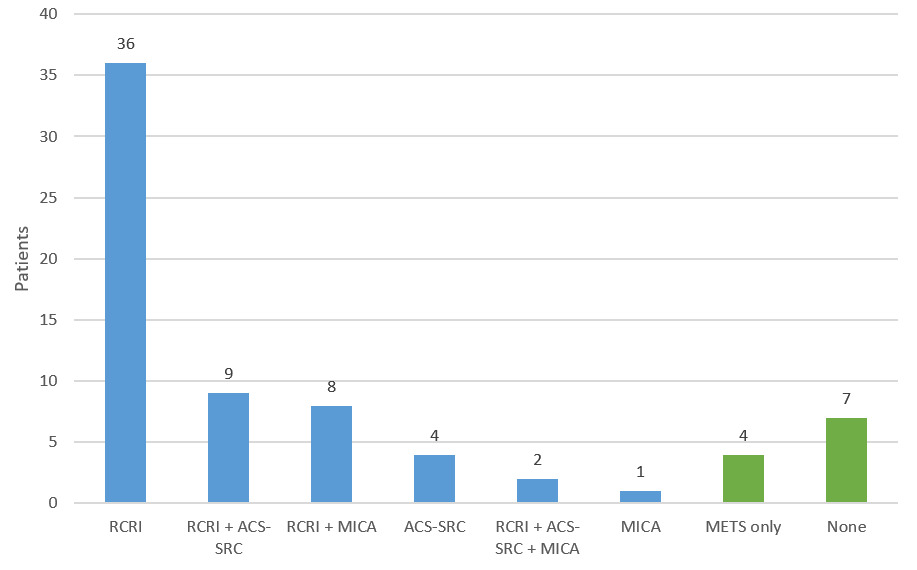

Among the 200 encounters reviewed, 71 (36%) were perioperative risk assessment notes. There was significant variation in how surgical risk was evaluated: 8 distinct approaches were used, employing some combination of RCRI, ACS-SRC, MICA, METS, or no tool at all (Figure 1). Nineteen notes (27%) documented multiple risk stratification tools (excluding METS, which is intended to be adjunctive), and these combinations account for the 8 unique approaches identified. The most common combination was RCRI with ACS-SRC (8 notes), followed by RCRI with MICA (6 notes). All three risk scores were documented in 2 notes. For the charts reviewed, the tools never gave discordant classifications of “low” vs “elevated” risk.

The most common single approach was RCRI, with or without METS (36 notes, 51%). Eleven of the 71 assessments (15%) were not consistent with ACC/AHA guidelines because either no formal tool was documented (7 notes) or only METS were documented (4 notes). All patients evaluated by a single tool were found to be low risk and therefore did not require further characterization of functional capacity (METS) under ACC/AHA guidelines.
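Because the Results use two denominators (200 encounters for the first figure, 71 risk assessments for the rest), a short recomputation makes the arithmetic explicit. The sketch below uses only counts stated in the text and rounds half up with integer arithmetic, which avoids floating-point surprises at exact halves such as 71/200; the helper name `pct` is hypothetical.

```python
def pct(count: int, total: int) -> int:
    """Percentage of count/total rounded half up, using integer arithmetic only."""
    return (200 * count + total) // (2 * total)


# (count, denominator, reported percentage) as stated in the Results
REPORTED = {
    "perioperative risk assessment notes / encounters": (71, 200, 36),
    "notes with multiple tools / assessments": (19, 71, 27),
    "RCRI with or without METS / assessments": (36, 71, 51),
    "guideline-inconsistent (7 no tool + 4 METS only) / assessments": (7 + 4, 71, 15),
}

for label, (count, total, reported) in REPORTED.items():
    print(f"{label}: {count}/{total} = {pct(count, total)}% (reported {reported}%)")
```

Each recomputed percentage matches the reported value.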

Discussion

This single-center retrospective chart review of surgical risk stratification practices by the hospital medicine consult service at a tertiary academic medical center revealed significant heterogeneity in documented approaches. The 2014 ACC/AHA guidelines recommend a step-wise approach to surgical risk stratification using 1 of 3 risk stratification tools (RCRI, MICA, or ACS-SRC) without endorsing any one specifically. Researchers hypothesized that this might create uncertainty about which tool to use and lead to diverse documentation practices, a hypothesis consistent with the results of this analysis.

RCRI was the most frequently used tool, appearing in 55 (77%) of the notes, followed by ACS-SRC in 15 (21%) and MICA in 11 (15%). Eight different approaches were documented because physicians often used multiple risk stratification tools for the same patient (27% of notes), suggesting uncertainty among providers as to which tool was best. Multiple studies have shown that the different risk assessment tools can produce different results for the same patient, which likely contributed to the use of multiple tools to ensure accuracy and completeness.14–16 We hypothesize that because the ACC/AHA guidelines do not reflect the updated risk estimates from the 2017 RCRI meta-analysis (which are used in the popular “MDCalc” online tool), providers might use a second tool such as the ACS-SRC or MICA to “double check” the older RCRI. In this study, however, there was no discordance between “low” and “elevated” risk when multiple tools were used for the same patient.

This study has several limitations, most notably its small sample size: only 200 encounters were reviewed, yielding 71 surgical risk assessments. Additionally, this was a single-center study at a tertiary academic center with a large hospital medicine department of more than 60 providers. The relatively large number of providers who rotate through the consult service likely contributed to the heterogeneity of risk assessment practices; smaller health systems may have a more uniform provider pool and thus more uniform documentation practices. This analysis was conceived as an opportunity to review documentation practices internally, as part of an effort to standardize perioperative assessments and improve communication with surgical providers.

In the seminal article “Ten Commandments for Effective Consultations,” Goldman and colleagues emphasized that clear and concise communication is imperative for consultant physicians.20 Goldman urged consultants to meet in person with primary providers to optimize clarity and allow follow-up questions to be answered. More recent articles have continued to emphasize the importance of clarity and concision in both verbal and written communication by internal medicine consultants.21

The ever-expanding and increasingly fragmented hospital environment makes in-person communication difficult. Written communication in the form of notes entered into the electronic health record (EHR) has increasingly replaced provider-to-provider handoffs.22 Although much research has evaluated the efficacy of note templates in improving clarity and satisfaction, we are not aware of any study that specifically investigates the use of standardized templates in medicine consultation.23–27 Given the uncertainty about which surgical risk stratification tool is optimal and the growing importance of notes entered into the EHR, we believe further research is needed to determine best practices for documenting surgical risk in a uniform and effective manner. Based on the results of this study, our hospital medicine consult service has enacted several quality improvement initiatives to standardize risk assessment practices, including providing all consult attendings with a handout detailing preferred documentation practices and building standardized chart phrases (dot phrases) for both the RCRI and MICA.

Consult attendings are provided with a template detailing how to document risk assessments using both patient- and surgery-specific language in hopes of improving uniformity. New EHR dot phrases for the RCRI and MICA enable all providers to clearly identify which risk factors are included in each scoring system and, in the case of the RCRI, automatically calculate the risk output. The ACS-SRC does not have a dot phrase because of its complexity and large number of input variables. Additionally, the handout recommends avoiding the RCRI for vascular surgery, given the evidence that it underestimates risk in those procedures. Further research is needed to determine whether there are other surgical procedures for which one risk tool predicts outcomes more accurately than the others. Our hope is that by offering both a template for how risk assessments should appear and dot phrases for the RCRI and MICA scores, EHR documentation will become more uniform, comprehensive, and accurate. We plan future studies to determine whether this effort reduces heterogeneity, standardizes how notes appear in the EHR, and even affects the ordering of collateral testing such as stress tests, echocardiograms, or postoperative high-sensitivity troponin assays, which was beyond the scope of this initial study.

After this study, the consult team held multiple meetings to consider mandating the use of a single risk assessment tool to ensure strict uniformity in assessment practices. The group decided not to mandate a single tool because individual providers have greater familiarity with, or preference for, one of the three tools.

In conclusion, this single-center retrospective study uncovered significant variation in approaches to surgical risk stratification. Although the RCRI, MICA, and ACS-SRC are all endorsed by the ACC/AHA, we are concerned that this degree of heterogeneity in consult notes unnecessarily complicates patient care and inhibits effective communication with surgical providers. The results of this analysis are informing ongoing efforts to standardize perioperative assessments and clarify documentation.

Author Contribution

All authors reviewed the final manuscript prior to submission. All authors contributed significantly to the manuscript, per the ICMJE criteria for authorship:

- Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work; AND
- Drafting the work or revising it critically for important intellectual content; AND
- Final approval of the version to be published; AND
- Agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Disclosures/Conflicts of Interest

None.

Funding

Not applicable.

Acknowledgements

We would like to thank the Section of Hospital Medicine at the University of Chicago including David Meltzer, Anton Chivu, Matthew Cerasale, and Elizabeth Murphy.

Corresponding author

John P Murray MD

Director of the Hospital Medicine consult service,

Clinical Associate of Medicine,

Section of Hospital Medicine at The University of Chicago,

Chicago, IL, USA

Email: murrayjp8@bsd.uchicago.edu